7.4. The Successive Over Relaxation (SOR) method#

The Successive Over Relaxation (SOR) method improves on the convergence rate of the Gauss-Seidel method by applying a weighting factor to the updated estimates to adjust the extent of the change. Let \(\omega\) be a relaxation parameter in the range \([0,2]\) then the SOR method is

Definition 7.5 (The Successive Over Relaxation (SOR) method)

The SOR method for solving a system of linear equations of the form \(A \mathbf{x} = \mathbf{b}\) is

where \(\omega \in [0, 2]\).

7.4.1. The iteration matrix for the SOR method#

The iteration matrix for the SOR method is derived by writing the coefficient matrix of the linear system \(A\mathbf{x}=\mathbf{b}\) using

Substituting into the linear system \(A \mathbf{x} = \mathbf{b}\) and rearranging

So the matrix form of the SOR method is

and the iteration matrix is

7.4.2. Under and over relaxation#

The value of \(\omega\) affects the rate of convergence of the SOR method and is determined by how the Gauss-Seidel method converges to the exact solution. Consider the diagrams in Fig. 7.4 and Fig. 7.5 below that shows the two types of convergence of the Gauss-Seidel method. When we have monotonic convergence where each successive iteration approaches the exact solution from a single side (Fig. 7.4), the rate of convergence is improved by using \(\omega > 1\) so that the change in subsequent approximations is increased. When we have oscillatory convergence where successive iterations overshoot the exact solution (Fig. 7.5) the rate of convergence is improved by using \(\omega < 1\) so that the change in the subsequent approximation is decreased.

Fig. 7.4 The estimated solution approaches the exact solution monotonically.#

Fig. 7.5 The estimated solution oscillates about the exact solution.#

7.4.3. Optimum value of the relaxation parameter#

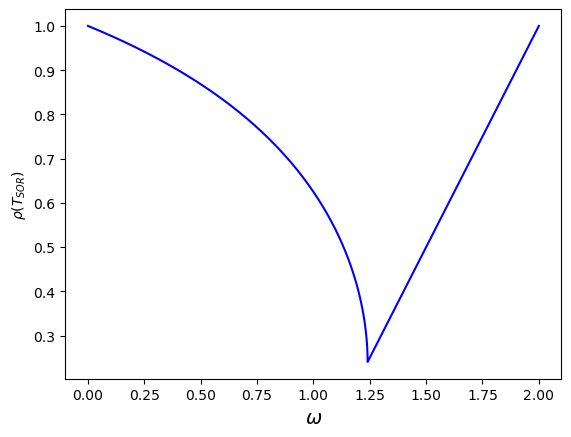

The optimum value of \(\omega\) will be the one that minimises the spectral radius of the iteration matrix. The iteration matrix will depend on the value of \(\omega\). One way to estimate the optimum value of \(\omega\) is to calculate \(\rho(T_{SOR})\) for values in the range \(\omega \in [0, 2]\) and choose the value of \(\omega\) which minimises this value. This has been done for the system of linear equations from Example 7.1 and the plot is shown in Fig. 7.6.

Fig. 7.6 Plot of the spectral radius \(\rho(T_{SOR})\) of the iteration matrix for the SOR method when used to solve the system of linear equations from Example 7.1.#

So an estimation of the optimum value of the relaxation parameters is \(\omega = 1.25\). If the coefficient matrix \(A\) has real eigenvalues we can use the following theorem to calculate the exact value of \(\omega\).

Theorem 7.2 (Optimum relaxation parameter)

If a system of linear equations of the form \(A\mathbf{x}=\mathbf{b}\) has a coefficient matrix \(A\) with all real eigenvalues then the optimum relaxation parameter for the SOR method can be calculated using

where \(\rho(T_J)\) is the spectral radius of the iteration matrix of the Jacobi method calculation using equation (7.4).

Example 7.4

Determine the optimum relaxation parameter for the SOR method when applied to the system of linear equations from Example 7.1 using equation (7.11).

Solution

We saw in Example 7.3 that the spectral radius of the iteration matrix for the Jacobi method for this system is \(\rho(T_J )=0.7906\).

Using equation (7.11)

Example 7.5

Solve the system of linear equations from from Example 7.1 using the SOR method with \(\omega = 1.24\) and an accuracy tolerance of \(tol = 10^{-4}\)

Solution

The SOR method for this system is

Using starting values of \(\mathbf{x} = \mathbf{0}\). Calculating the first iteration

Calculate the residual

Since \(\max(| \mathbf{r}^{(1)} |) = 6.190200 > 10^{-4}\) we continue iterating. Calculating the second iteration

Calculate the residual

Since \(\max(| \mathbf{r}^{(2)} |) = 0.989642 > 10^{-4}\) we continue iterating.

The SOR method was iterated until \(\max(|\mathbf{r}|) < 10^{-4}\) and the iteration values are given in the table below. Note that the SOR method took 9 iterations to achieve convergence to \(tol=10^{-4}\) whereas the Jacobi method took 49 iterations and the Gauss-Seidel method took 19 iterations to achieve the same accuracy.

\(k\) |

\(x_{1}\) |

\(x_{2}\) |

\(x_{3}\) |

\(\max( | \mathbf{r} | )\) |

|---|---|---|---|---|

0 |

0.000000 |

0.000000 |

0.000000 |

14.000000 |

1 |

-0.620000 |

-1.903400 |

3.749946 |

6.19e+00 |

2 |

1.298962 |

-2.068735 |

2.798705 |

9.90e-01 |

3 |

0.992173 |

-2.038626 |

3.036337 |

2.14e-01 |

4 |

1.037801 |

-2.014620 |

2.986747 |

1.07e-01 |

5 |

1.004524 |

-2.004807 |

3.001690 |

1.16e-02 |

6 |

1.003385 |

-2.001470 |

2.999139 |

9.13e-03 |

7 |

1.000555 |

-2.000430 |

3.000073 |

9.29e-04 |

8 |

1.000267 |

-2.000122 |

2.999944 |

7.01e-04 |

9 |

1.000050 |

-2.000034 |

3.000003 |

9.65e-05 |

7.4.4. Code#

The code below defines a function called sor() which solves a linear system of equations of the for \(A \mathbf{x} = \mathbf{b}\) using the SOR method with a relaxation parameter \(\omega\).

def sor(A, b, omega, tol):

n = len(b)

x = np.zeros(n)

for k in range(100):

for i in range(n):

sum_ = 0

for j in range(n):

if i != j:

sum_ += A[i,j] * x[j]

x[i] = (1 - omega) * x[i] + omega * (b[i] - sum_) / A[i,i]

r = b - np.dot(A, x)

if max(abs(r)) < tol:

break

return x

function x = sor(A, b, omega, tol)

n = length(b);

x = zeros(n, 1);

for k = 1 : 100

for i = 1 : n

sum_ = 0;

for j = 1 : n

if j ~= i

sum_ = sum_ + A(i,j) * x(j);

end

end

x(i) = (1 - omega) * x(i) + omega * (b(i) - sum_) / A(i,i);

end

r = b - A * x;

if max(abs(r)) < tol

break

end

end

end