6.5. QR decomposition#

QR decomposition is another decomposition method that factorises a matrix in the product of two matrices \(Q\) and \(R\) such that

where \(Q\) is an orthogonal matrix and \(R\) is an upper triangular matrix.

Definition 6.2 (Orthogonal vectors)

A set of vectors \(\lbrace \mathbf{v}_1 ,\mathbf{v}_2 ,\mathbf{v}_3 ,\dots \rbrace\) is said to be orthogonal if \(\mathbf{v}_i \cdot \mathbf{v}_j =0\) for \(i\not= j\). Furthermore the set is said to be orthonormal if \(\mathbf{v}_i\) are all unit vectors.

Definition 6.3 (Orthogonal matrix)

An orthogonal matrix is a matrix where the columns are a set of orthonormal vectors. If \(A\) is an orthogonal matrix if

For example consider the matrix

This is an orthogonal matrix since

Futhermore it is an orthonormal matrix since the magnitude of the columns for \(A\) are both 1.

6.5.1. QR decomposition using the Gram-Schmidt process#

Consider the \(3 \times 3\) matrix \(A\) represented as the concatenation of the column vectors \(\mathbf{a}_1, \mathbf{a}_2, \mathbf{a}_3\)

where \(\mathbf{a}_j = (a_{1j}, a_{2j}, a_{3j})\). To calculate the orthogonal \(Q\) matrix we need a set of vectors \(\{\mathbf{q}_1, \mathbf{q}_2, \mathbf{q}_3\}\) that span the same space as \(\{\mathbf{a}_1, \mathbf{a}_2, \mathbf{a}_3\}\) but are orthogonal to each other. One way to do this is using the Gram-Schmidt process. Consider the diagram shown in Fig. 6.1 that shows the first two non-orthogonal vectors \(\mathbf{a}_1\) and \(\mathbf{a}_2\).

Fig. 6.1 The Gram-Schmidt process#

We begin by letting \(\mathbf{u}_1 = \mathbf{a}_1\) and seek to find a vector \(\mathbf{u}_2\) that is orthogonal to \(\mathbf{u}_1\) and in the same span as \(\mathbf{a}_2\). To do this we subtract the vector projection of \(\mathbf{a}_2\) onto \(\mathbf{u}_1\), i.e.,

We can simplify things by normalising \(\mathbf{u}_1\) such that \(\mathbf{q}_1 = \dfrac{\mathbf{u}_1}{\|\mathbf{u}_1\|}\) so we have

which we also normalise to give \(\mathbf{q}_2 = \dfrac{\mathbf{u}_2}{\|\mathbf{u}_2\|}\). For the next vector \(\mathbf{a}_3\) we want the vector \(\mathbf{u}_3\) to be orthogonal to both \(\mathbf{q}_1\) and \(\mathbf{q}_2\) so we subtract both the vector projections of \(\mathbf{a}_3\) onto \(\mathbf{q}_1\) and \(\mathbf{q}_2\)

which is normalised to give \(\mathbf{q}_3\). Using \(\mathbf{u}_i = (\mathbf{q}_i \cdot \mathbf{a}_i) \mathbf{q}_i\) and rearranging (6.3) and (6.4) to make \(\mathbf{a}_i\) the subject and

The columns of the \(Q\) matrix are formed using the vectors \(\mathbf{q}_i\)

and the elements of the \(R\) matrix are formed from the dot products of the \(\mathbf{q}_i\) and \(\mathbf{a}_j\) vectors such that

Positive diagonal elements of \(R\)#

It has become standard convention to impose a condition that the diagonal elements of \(R\) must be positive so that we get a unique factorisation. Consider \(A = QR\) then multiplying a column of \(Q\) by \(-1\) and the corresponding row of \(R\) by \(-1\) doesn’t change the product \(QR\). So if any of the diagonal elements of \(R\) are negative we multiply the corresponding column of \(Q\) and row of \(R\) by \(-1\).

Algorithm 6.7 (QR decomposition using the Gram-Schmidt process)

Inputs: An \(m \times n\) matrix \(A\).

Outputs: An \(m \times n\) orthogonal matrix \(Q\) and and \(n \times n\) upper triangular matrix \(R\) such that \(A = QR\).

For \(j = 1, \ldots, n\) do

For \(i = 1, \ldots, j - 1\) do

\(r_{ij} \gets \mathbf{q}_i \cdot \mathbf{a}_j\)

\(\mathbf{u}_j \gets \mathbf{a}_j - \displaystyle\sum_{i=1}^{j-1} r_{ij} \mathbf{q}_i\)

\(r_{jj} \gets \| \mathbf{u}_j \|\)

\(\mathbf{q}_j \gets \dfrac{\mathbf{u}_j}{r_{jj}}\)

\(Q \gets (\mathbf{q}_1, \mathbf{q}_2, \ldots, \mathbf{q}_2)\)

For \(i = 1, \ldots, n\) do

If \(r_{ii} < 0\) then

\(R(i,: ) \gets -R(i,:)\)

\(Q(:,i) \gets -Q(:,i)\)

Return \(Q\) and \(R\)

Example 6.8

Calculate the QR decomposition of the following matrix using the Gram-Schmidt process

Solution

The diagonal elements of \(R\) are all positive so we don’t need to make any adjustments. Thus the \(Q\) and \(R\) matrices are given by

We can check that this is correct by verifying that \(A = QR\) and that \(Q^\mathsf{T}Q = I\).

Code#

The code below defines a function called qr_gramschmidt() which calculates the QR decomposition of a matrix A using the Gram-Schmidt process.

def qr_gramschmidt(A):

n = A.shape[1]

Q, R = np.copy(A).astype(float), np.zeros((n,n))

for j in range(n):

for i in range(j):

R[i,j] = np.dot(Q[:,i], A[:,j])

Q[:,j] = Q[:,j] - R[i,j] * Q[:,i]

R[j,j] = np.linalg.norm(Q[:,j])

Q[:,j] = Q[:,j] / R[j,j]

for i in range(n):

if R[i,i] < 0:

R[i,:] *= -1

Q[:,i] *= -1

return Q, R

function [Q, R] = qr_gramschmidt(A)

[~, n] = size(A);

Q = A;

R = zeros(n, n);

for j = 1 : n

for i = 1 : j - 1

R(i,j) = dot(Q(:,i), A(:,j));

Q(:,j) = Q(:,j) - R(i,j) * Q(:,i);

end

R(j,j) = norm(Q(:,j));

Q(:,j) = Q(:,j) / R(j,j);

end

for i = 1 : n

if R(i,i) < 0

R(i,:) = -R(i,:);

Q(:,i) = -Q(:,i);

end

end

end

6.5.2. QR decomposition using Householder reflections#

Another method we can use to calculate the QR decomposition of a matrix is by the use of Householder reflections.

Definition 6.4 (Householder matrix)

The Householder matrix is an orthogonal reflection matrix of the form

where \(\mathbf{v}\) is a non-zero column vector. The matrix \(H\) is symmetric and orthogonal.

Fig. 6.2 The vector \(\mathbf{x}\) is reflected about the dashed line so that it is parallel to the basis vector \(\mathbf{e}_1\).#

Consider the diagram in Fig. 6.2 where the vector \(\mathbf{x}\) is reflected about the hyperplane represented by the dashed line so that it is parallel to the first basis vector of the standard basis \(\mathbf{e}_1 = (1, 0, \ldots, 0)^\mathsf{T}\). The Householder matrix that achieves this reflection is written in terms of the vector \(\mathbf{v}\) which is orthogonal to the dashed line and calculated using

If the magnitude of \(\mathbf{x}\) is close to being parallel to \(\mathbf{e}_1\) then \(\mathbf{v}\) will be close to \(\mathbf{0}\) and the calculation of (6.5) will involve the division by a number close to zero. To overcome this we use a Householder transformation to reflect \(\mathbf{x}\) so that it is parallel to \(-\mathbf{e}_1\) instead of \(+\mathbf{e}_1\).

Fig. 6.3 The vector \(\mathbf{x}\) is reflected about the dashed line so that it is parallel to the basis vector \(-\mathbf{e}_1\).#

To do this we calculate \(\mathbf{v}\) by adding \(\| \mathbf{x} \| \mathbf{e}_1\) to \( \mathbf{x}\) when the sign of \(x_1\) is negative, i.e.,

where \(\operatorname{sign}(x)\) returns \(+1\) if \(x \geq 0\) and \(-1\) if \(x < 0\). To compute the QR decomposition of a matrix \(A\), we reflect the first column of \(A\), using Householder reflection so that it is parallel to \(\mathbf{e}_1\). This means that \(r_{11}\) is the only non-zero element in the first column of \(R\).

We then look to do the same for the sub-matrix of \(R\) formed by omitted its first row and column. We do this by calculating a \((m-1) \times (m-1)\) Householder matrix \(H'\) using the second column of \(R\) starting from the diagonal element, i.e., \(\mathbf{x} = (b_{22}, b_{32}, \ldots, b_{m2})^\mathsf{T}\). This is used to form the \(m \times m\) Householder matrix \(H_2\) by padding out the first row and column of the identity matrix.

The Householder matrix \(H_2\) is then applied to \(R\) so that the elements of the second column below the diagonal are zeroed out.

We repeat this process until column \(j = m - 1\) when \(R\) will be an upper triangular matrix. If \(A\) has \(n\) columns then

rearranging gives

The Householder matrices are symmetric and orthogonal so \(H^{-1} = H\) and since we want \(A = QR\) then

Algorithm 6.8 (QR decomposition using Householder reflections)

Inputs: An \(m \times n\) matrix \(A\).

Outputs: An \(n \times n\) orthogonal matrix \(Q\) and and \(n \times n\) upper triangular matrix \(R\) such that \(A = QR\).

\(Q \gets I_m\)

\(R \gets A\)

For \(j = 1, \ldots, m - 1\) do

\(\mathbf{x} \gets\) column \(j\) of \(R\) starting at the \(j\)-th row

\(\mathbf{v} \gets \mathbf{x} - \operatorname{sign} (x_1) \|\mathbf{x}\|\mathbf{e}_1\)

\(H' \gets I_{m-j+1} - 2 \dfrac{\mathbf{v} \mathbf{v} ^\mathsf{T}}{\mathbf{v}^\mathsf{T} \mathbf{v}}\)

\(H(j:m, j:m) \gets H'\) with the first \(j-1\) rows and columns of \(H\) set to those of \(I_m\)

\(R \gets H R\)

\(Q \gets Q H\)

For \(i = 1, \ldots, n\) do

If \(R(i,i) < 0\) then

\(R(i,: ) \gets -R(i,:)\)

\(Q(:,i) \gets -Q(:,i)\)

Return \(Q\) and \(R\).

Example 6.9

Calculate the QR decomposition of the following matrix using Householder reflections

Solution

Set \(Q = I\) and \(R = A\). We want to zero out the elements below \(R(1,1)\) so \(\mathbf{x} = (1, 2, 2)^\mathsf{T}\) and \(\|\mathbf{x}\| = 3\).

The second diagonal element of \(R\) is negative, so we multiply row 2 of \(R\) and column 2 of \(Q\) by \(-1\)

We can check whether this is correct by verifying that \(A = QR\) and \(Q^\mathsf{T} Q = I\).

Code#

The code below defines a function called qr_householder() that calculates the QR decomposition of an \(m \times n\) matrix \(A\) using Householder reflections. This has been used to calculate the QR decomposition of the matrix from Example 6.9.

def qr_householder(A):

m, n = A.shape

Q, R = np.eye(m), np.copy(A).astype(float)

for i in range(m - 1):

x = R[i:,i]

v = np.array([x + np.sign(x[0]) * np.linalg.norm(x) * np.eye(m - i)[:,0]]).T

H = np.eye(m)

H[i:,i:] -= 2 * np.dot(v, v.T) / np.dot(v.T, v)

R = np.dot(H, R)

Q = np.dot(Q, H)

for i in range(n):

if R[i,i] < 0:

R[i,:] *= -1

Q[:,i] *= -1

return Q, R

function [Q, R] = qr_householder(A)

[m, n] = size(A);

Q = eye(m);

R = A;

for i = 1 : m - 1

x = R(i:end,i);

if sign(x(1)) > 0

v = x + norm(x) * [1 ; zeros(m-i,1)];

else

v = x - norm(x) * [1 ; zeros(m-i,1)];

end

H = eye(m);

H(i:end,i:end) = H(i:end,i:end) - 2 * v * v' / dot(v, v);

R = H * R;

Q = Q * H;

end

for i = 1 : n

if R(i,i) < 0

R(i,:) = -R(i,:);

Q(:,i) = -Q(:,i);

end

end

end

6.5.3. Loss of orthogonality#

Like any numerical technique, the QR decomposition methods shown here are prone to computational rounding errors. If \(Q\) is perfectly orthogonal then \(Q^\mathsf{T}Q = I\), but if there is bound to be some small error. If we let this error be \(E\) then \(Q^\mathsf{T}Q = I + E\). Rearranging gives

which is known as the Frobenius norm and is a measure of the loss of orthogonality.

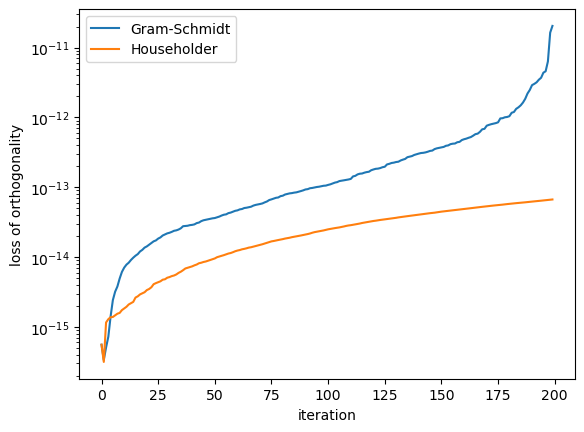

The both QR decomposition methods shown here use an iterative approach where we orthogonalised the columns of the \(A\) matrix in turn based upon the previous iterations. So an error in column \(i\) will have a knock on effect on all subsequent columns. To analyse the methods, the QR decomposition of a random \(200 \times 200\) matrix \(A\) was computed using the Gram-Schmidt process and Householder reflections. The Frobenius norm was calculated for the first \(n\) columns of \(Q\) where \(n = 1 \ldots 200\) giving the loss of orthogonality for those iterations and plotted in below.

Here we can see that computing QR decomposition using Householder reflections is less prone to orthogonality errors than using the Gram-Schmidt process.