1.2. Error analysis#

In Example 1.1 in the previous section we solved the following IVP using the Euler method

This IVP is quite simple we can use analytical methods to determine the solution which is \(y = e^{t^2/2}\). Since we have an exact solution we can calculate the absolute error between the numerical solution and the exact solution using

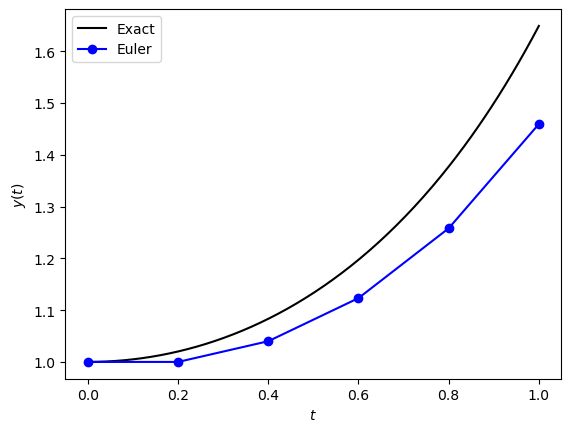

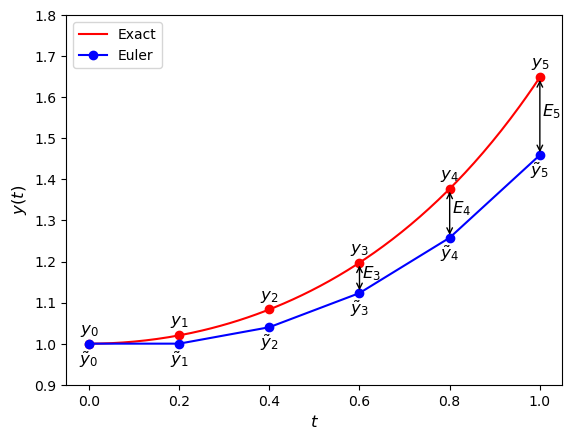

The numerical solution to this IVP computed using the Euler method, the exact solution for the same \(t\) values used in the Euler method and the absolute error are tabulated below, and the solutions plotted in Fig. 1.5.

\(t\) |

Euler |

Exact |

Error |

|---|---|---|---|

0.0 |

1.000000 |

1.000000 |

0.000000 |

0.2 |

1.000000 |

1.020201 |

0.020201 |

0.4 |

1.040000 |

1.083287 |

0.043287 |

0.6 |

1.123200 |

1.197217 |

0.074017 |

0.8 |

1.257984 |

1.377128 |

0.119144 |

1.0 |

1.459261 |

1.648721 |

0.189460 |

Fig. 1.5 Comparisons between the Euler method solutions and the exact solutions for the IVP \(y'=ty\), \(t\in[0,1]\), \(y(0)=1\).#

It is clear that the numerical solution computed using the Euler method becomes increasingly inaccurate as \(t\) increases.

1.2.1. Changing the step length#

Analysing the error of a numerical method is a challenge since in most cases we do not know the exact solution. Instead, we study the behaviour of the error as the step length changes. Recall the Euler method

The solution over a single time step is changed from the current solution \(y_n\) by \(h\) multiplied by the value of \(f(t_n, y_n)\). If we assume that that value of \(y_n\) has some error associated to it then the error will also be changed by \(h\) multiplied by some value. Therefore, the smaller the value of \(h\) then the smaller the change in the error converging to zero when \(h\) is infinitesimally small.

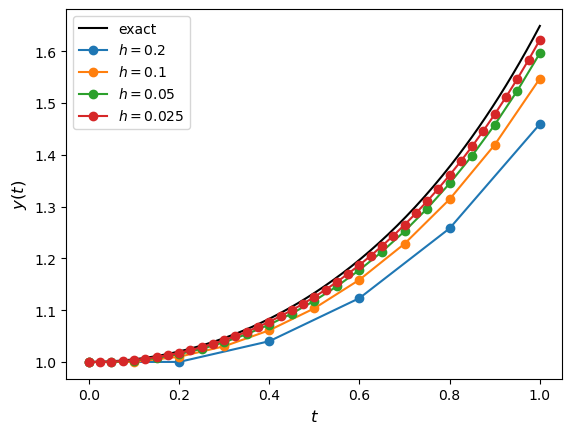

We can demonstrate this by looking at the solutions to the initial value problem from Example 1.1 using different values of the step length \(h\). The numerical solutions using \(h=0.2, 0.1, 0.05, 0.005\) have been plotted against the exact solution in Fig. 1.6.

Fig. 1.6 Solutions to the IVP \(y'=ty\), \(t \in [0,1]\), \(y(0)=1\) using the Euler method with step lengths \(h=0.2, 0.1, 0.05, 0.005\).#

Here we can see that as the step length is reduced, the solution gets closer to the exact solution as expected. The absolute errors between the exact solution \(y(1) = e^{\frac{1}{2}}\) and the Euler method solution calculated using the different step lengths are tabulated below.

\(h\) |

Error |

|---|---|

0.200 |

0.1895 |

0.100 |

0.1016 |

0.050 |

0.0528 |

0.025 |

0.0269 |

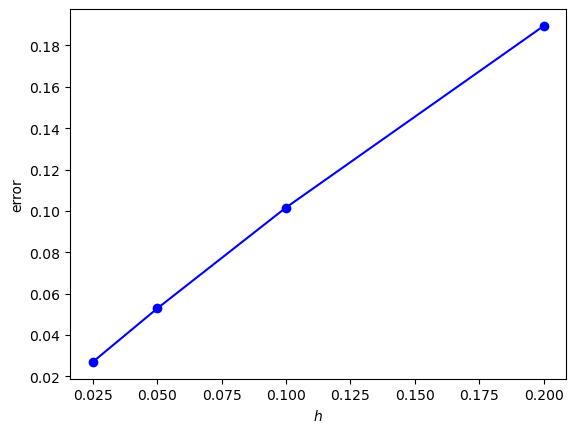

This shows that as the step length is halved, the error decreases by a factor of approximately one half. This behaviour can be seen in Fig. 1.7 where the absolute errors between the numerical solutions for \(y(1)\) and the exact solution is plotted against the step length.

Fig. 1.7 Solutions to the IVP \(y'=ty\) using step lengths \(h=0.2, 0.1, 0.05, 0.005\).#

1.2.2. Big-O notation#

We saw in Fig. 1.7 that has the step length \(h\) decreases, the error tends to zero. Different numerical methods will converge to zero at different rates, and it is advantageous for us to use methods that converge raster so that we have more accurate solutions. Since in most cases we do not know the exact solution and therefore cannot calculate the errors, we compare the accuracy of different methods using the expected rate of convergence to zero depending on the step length \(h\), which is known as big-O notation.

Definition 1.4 (Big-O notation)

Let \(f(h)\) be a function then if \(f(h) = O(h^n)\)

for some positive constant \(C\).

If a function \(f(h)\) is \(f(h) = O(h^n)\) then this means \(f(h)\) tends to zero at least as fast as \(h^n\). For example, if \(f(h) = O(h)\) then, if we halve \(h\) then we would expect \(f(h)\) to also be halved since \((\frac{1}{2})^1 = \frac{1}{2}\) (known as linear convergence), however if \(f(h) = O(h^2)\) then, if we halve \(h\) then the value of \(f(h)\) would decrease by a factor of \(\frac{1}{4}\) since \((\frac{1}{2})^2 = \frac{1}{4}\) (known as quadratic convergence). So the higher the power of \(h\) the quicker the function \(f(h)\) converges to zero as \(h\) decreases.

Since the error of a numerical method is dependent upon the step length \(h\) then we can say that it behaves like a polynomial function, and we can approximate the error using \(f(h) = O(h^n)\).

Definition 1.5 (Order of a method)

If the error of a numerical method is \(O(h^n)\) then the method is said to be of order \(n\).

In general, the higher the order of a method, the more accurate the solution will be when using the same step length \(h\).

1.2.3. Local truncation Error#

The local truncation error, denoted by \(e_n\), is the error over a single step of a method assuming that the solution at the previous step is exact. In the derivation of the Euler method we made the assumption that the computed value of \(y_{n+1}\) is an approximation of the exact solution \(y(t_{n+1})\). The local truncation error \(e_{n+1}\) is the difference between \(y_{n+1}\) and \(y(t_{n+1})\)

Substituting the Euler method solution \(y_{n+1} = y_n + h f(t_n, y_n)\) gives

Since \(y(t_{n+1}) = y(t_n + h)\), we can expand \(y(t_{n+1})\) about \(t_n\) using the Taylor series

where \(\tilde{t_n}\) is some point between \(t_{n}\) and \(t_{n} + h\). Since \(y'(t_n) = f(t_n, y_n)\), substituting equation (1.4) into equation (1.3) gives

So the local truncation error for the Euler method is proportional to \(h^2\). The actual value of the local truncation error is dependent on the value of \(y''(\tilde{t_n})\) so will change depending on the solution to the ODE and the value of \(t\). Assuming that the functions \(f\) and its partial derivatives \(f_t\) and \(f_y\) are bounded, we can introduce a constant \(M\) such that

This means that the worst possible truncation error for the Euler method is \(\dfrac{M h^2}{2}\).

1.2.4. Global truncation error#

The global truncation error denoted by \(E_n\), is the accumulation of the local truncation errors for the steps of the method to compute that solution from \(t=t_0\) up to \(t = t_n\) (Fig. 1.8).

Fig. 1.8 The global truncation errors \(E_n\) for the Euler method solution to the IVP \(y' = ty\), \(t\in [0, 1]\), \(y(0)= 1\) using a step length of \(h = 0.2\).#

We saw that the local truncation error at each step is at most \(\dfrac{Mh^2}{2}\) then after \(n\) steps the upper bound of the global truncation error is at most \(\dfrac{nMh^2}{2}\). Using a constant step length \(h\) then

so the upper bound of the global truncation error is

If \(C = (t_n - t_0 ) M / 2\) is some positive constant then \(E_n \leq C h\) so we say that \(E_n = O(h)\). So as the step length \(h\) decreases, the global truncation error for Euler’s method will converge to zero in a linear fashion, that is, halving the step length should also approximately halve the global truncation error. This confirms what we saw in the plot of the errors against step length for the Euler method (Fig. 1.7) where the errors formed a line with a gradient of approximately 1.