2.7. Adaptive step size control#

The implementations of the Runge-Kutta methods that we have used previously in this chapter have used a constant value of the step length \(h\). Using a fixed step length does not allow the method to take advantage of increasing the step length where the behaviour of the solution allows. Adaptive step size control is a method that attempts to control the value of the step length based on accuracy requirements. The accuracy of the approximation to the solution to the ODE is dependent upon three factors

the order of accuracy of the computational method;

the size of the step length used to advance the solution;

the behaviour of the solution.

Improving the order of accuracy of the method is often not straightforward and can place restrictions on the applicability of the method. Reducing the step length used will improve the accuracy of the approximation but since more steps are required to advance through the domain, this will increase the computational cost. The behaviour of the solution will have an effect on the accuracy of the approximation because computational methods are more accurate where the solution is slowly varying and less accurate where there are rapid variations in the solution.

For each step of the algorithm we compute two solutions of order \(p\) and \(p+1\) and calculate the difference between the two solutions. If this difference is within a desired accuracy tolerance, then the step is considered to be successful and the algorithm proceeds onto the next step and the step length is increased. If this is not the case then the step is considered to have failed and is repeated using a smaller step length.

2.7.1. Absolute and relative tolerances#

When determining whether a solution step is accurate enough we need to consider the size of the values involved. Consider the case where we are applying a population model where the solution values are expected to be large. Let’s say that the solutions for a step are \(y^{(p)} = 1000000\) for the order \(p\) method and \(y^{(p+1)} = 1000100\) for the order \(p + 1\) method. If we were to use the absolute difference the two solutions then we have

So if we set our accuracy tolerance to \(10^{-3}\) the step would consider to have failed and we would repeat the step. However, if we calculate the difference between \(y^{(p+1)}\) relative to \(y^{(p)}\), known as the relative difference, we have

which is less than \(10^{-3}\) and the step would consider to be successful.

Now consider a case where we are applying a chemical kinetics model where the solution values are expected to be very small. If the solutions for a step are \(y^{(p)} = 1 \times 10^{-6}\) and \(y^{(p+1)} = 2\times 10^{-6}\) then relative difference is

which is a lot greater than the accuracy tolerance of \(10^{-3}\) and the step would consider to have failed. But the absolute difference is

which is a lot less than the accuracy tolerance and the step would consider to be successful.

So when the values of the solution are large we use the relative difference between the two solutions, and when the values of the solution are small we use the absolute difference. But we want an algorithm that can deal with both cases. To do this we use two accuracy tolerances, \(atol\) and \(rtol\) for the absolute and relative differences respectively such that \(atol < rtol\), and compare the absolute difference between the two solutions so that a step is considered to have been successful if

Using \(atol = 10^{-6}\) and \(rtol = 10^{-3}\) for the population model example we have

Since \(100 < 1000.100001\) the step is considered to be successful. Alternatively, for the chemical kinetics example we have

and since \(10^{-6} < 1.002\times 10^{-6}\) the step is considered to be successful.

2.7.2. Calculating the new step length#

At each step in the method we will be adjusting the value of the step length. If a step has failed we want to reduce the value of the step length so that the solution is more accurate. If a step is successful this indicates the step length used could have been larger, so we want to increase it but not too much as to result in a failed step in the next step.

Let \(y^{(p)}\) and \(y^{(p+1)}\) denote the numerical solutions calculated using the order \(p\) and \(p+1\) method respectively and \(y\) denote the exact solution then

Subtracting the second equation from the first gives

If we let \(\Delta = |y^{(p)} - y^{(p+1)}|\) and since \(O(h^{p+1}) + O(h^{p+2}) = O(h^{p+1})\) (the larger error dominates the smaller one) then by the definition of \(O(h^n)\)

We want to calculate a new step length \(h_{new}\) which results in a desired accuracy tolerance \(tol\), i.e.,

and defining a ratio \(r\) between the new step length and current step length, \(r = h_{new} / h\), then

So when \(\Delta > tol\), \(r < 1\) and the step length is decreased, and when \(\Delta < tol\), \(r > 1\) and the step length is increased. To prevent the step length being decreased too much we limit the value of \(r\) to 0.1 so that the step length is decreased by a maximum of one tenth each step (the value of 0.1 is arbitrary). Also, we limit the amount by which the step length is increased by a safety factor of 0.8 to prevent the future step failing.

We can use the value of \(rtol\) to give us an approximation for the initial value of \(h\)

2.7.3. Embedded Runge-Kutta methods#

German mathematician Erwin Fehlberg (1911 - 1990) derived a Runge-Kutta method where different weightings applied to the stage values can result in a method of fourth or fifth-order without having to repeat the calculations of the ODE function. Since Fehlberg’s original method, several other Runge-Kutta methods that use the same stage values for producing solutions of different orders have been suggested and these methods are known as embedded Runge-Kutta methods.

The Butcher tableau for an embedded Runge-Kutta method takes the form

Where the weights \(b_i\) and \(b_i^*\) that give an order \(p+1\) and \(p\) method respectively, i.e.,

where \(k_i\) are the same for both equations. Examples of embedded Runge-Kutta methods are the Bogacki-Shampine 3(2) method and Fehlberg’s 5(4) method.

Definition 2.3 (Bogacki-Shampine 3(2) Runge-Kutta method (RK23))

Bogacki and Shampine [1989]

Definition 2.4 (Fehlberg’s order 5(4) Runge-Kutta method (RKF45))

Fehlberg [1969]

Algorithm 2.2 (Solving an IVP using a single step method with adaptive step size control)

Inputs A system of first-order ODEs of the form \(\mathbf{y}' = f(t,\mathbf{y})\), the domain \(t \in [t_0, t_{\max}]\), the initial values \(\mathbf{y}(t_0) = \mathbf{y}_0\), and desired accuracy tolerances \(atol\) and \(rtol\).

Outputs \((t_0, t_1, \ldots)\) and \((\mathbf{y}_0, \mathbf{y}_1, \ldots)\).

\(h \gets 0.8 \cdot rtol^{^\frac{1}{p+1}}\)

\(n \gets 0\)

While \(t_n < b\)

Calculate the \(\mathbf{y}^{(p+1)}\) and \(\mathbf{y}^{(p)}\) solutions

\(\Delta \gets \| \mathbf{y}^{(p+1)} - \mathbf{y}^{(p)} \|\)

\(tol \gets atol + rtol \cdot \min (| \mathbf{y}^{(p+1)} |)\)

If \(\Delta < tol\)

\(\mathbf{y}_{n+1} \gets \mathbf{y}^{(p+1)}\)

\(t_{n+1} \gets t_n + h\)

\(n \gets n + 1\)

\(r \gets \max \left(0.1, 0.8 \left( \frac{tol}{\Delta} \right) ^{1/(p+1)} \right)\)

\(h \gets \min(h, t_{\max} - t_n)\)

Return \((t_0, t_1, \ldots)\) and \((\mathbf{y}_0, \mathbf{y}_1, \ldots)\)

Example 2.8

Use Bogacki-Shampine method to solve the following IVP using accuracy tolerances of \(atol = 10^{-6}\) and \(rtol = 10^{-3}\)

Solution

Here \(f(t, y) = e^{t - y\sin(y)}\), \(t= 0\) and \(y_0 = 0\). Calculating the initial step length gives

Calculating the first step of the Bogacki-Shampine 3(2) method gives

Calculate \(\Delta\) and \(tol\)

so \(\Delta < tol\) and the step has been successful. Calculating the new value of \(h\)

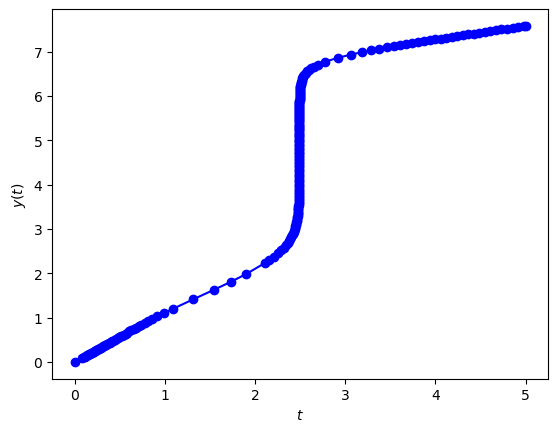

The solution over the full domain \(t\in [0, 5]\) is shown in Fig. 2.7.

Fig. 2.7 Bogacki-Shampine 3(2) method solution to the initial value problem \(y' = -21y + e^{-t}\), \(t\in [0,1]\), \(y(0)=0\) with an initial step length \(h=0.1\) and an accuracy tolerance \(tol=10^{-4}\).#

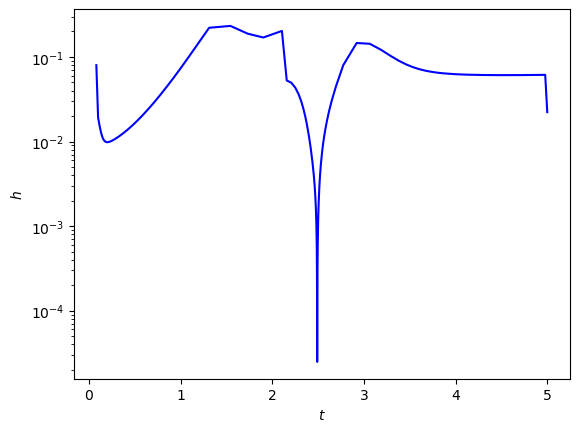

A plot of the step lengths used across the domain is shown in Fig. 2.8. Note how the step lengths decrease where the solution is steeper and increase where it is more slowly varying.

Fig. 2.8 Bogacki-Shampine 3(2) method solution to the initial value problem \(y' = -21y + e^{-t}\), \(t\in [0,1]\), \(y(0)=0\) with an initial step length \(h=0.1\) and an accuracy tolerance \(tol=10^{-4}\).#

2.7.4. Code#

The code below defines the function rkf23() which computes the solution to an initial value problem using the Bogacki-Shampine 3(2) embedded Runge-Kutta method. Since we do not know beforehand how many steps will be required, we assume the number steps will be large and use a while loop instead of a for loop for stepping through the solution.

def rk23(f, tspan, y0, atol=1e-6, rtol=1e-3):

m = len(y0)

t = np.zeros(100000)

y = np.zeros((100000, m))

t[0] = tspan[0]

y[0,:] = y0

h = 0.8 * rtol ** (1 / 3)

n = 0

while t[n] < tspan[-1]:

k1 = f(t[0], y[0,:])

k2 = f(t[n] + 1/2 * h, y[n,:] + 1/2 * h * k1)

k3 = f(t[n] + 3/4 * h, y[n,:] + 3/4 * h * k2)

k4 = f(t[n] + h, y[n,:] + h * (2/9 * k1 + 1/3 * k2 + 4/9 * k3))

y3 = y[n,:] + h * (2/9 * k1 + 1/3 * k2 + 4/9 * k3)

y2 = y[n,:] + h * (7/24 * k1 + 1/4 * k2 + 1/3 * k3 + 1/8 * k4)

delta = np.linalg.norm(y3 - y2)

tol = atol + rtol * np.min(np.abs(y3))

if delta < tol:

y[n+1,:] = y3

t[n+1] = t[n] + h

n += 1

r = max(0.1, 0.8 * (tol / delta) ** (1/3))

h = min(r * h, tspan[-1] - t[n])

return t[:n+1], y[:n+1,:]

function [t, y] = rk23(f, tspan, y0, atol, rtol)

m = length(y0);

t = zeros(100000, 1);

y = zeros(100000, m);

t(1) = tspan(1);

y(1,:) = y0;

h = 0.8 * rtol ^ (1/3);

n = 1;

while t(n) < tspan(2)

k1 = f(t(n), y(n,:));

k2 = f(t(n) + 1/2 * h, y(n,:) + 1/2 * h * k1);

k3 = f(t(n) + 3/4 * h, y(n,:) + 3/4 * h * k2);

k4 = f(t(n) + h, y(n,:) + h * (2/9 * k1 + 1/3 * k2 + 4/9 * k3));

y3 = y(n,:) + h * (2/9 * k1 + 1/3 * k2 + 4/9 * k3);

y2 = y(n,:) + h * (7/24 * k1 + 1/4 * k2 + 1/3 * k3 + 1/8 * k4);

delta = norm(y3 - y2);

tol = atol + rtol * min(abs(y3));

if delta < tol

y(n+1,:) = y3;

t(n+1) = t(n) + h;

k1 = k4;

n = n + 1;

end

r = max(0.1, 0.8 * (tol / delta) ^ (1/3));

h = min(r * h, tspan(2) - t(n));

end

t(n+1:end) = [];

y(n+1:end,:) = [];

end