3.3. Solving Systems of ODEs using IRK Methods#

Now that we know have to derive IRK methods we now move onto implementing them to solve IVPs. The stage values for a general 4-stage explicit Runge-Kutta method are

We have seen that it is straightforward to compute the stages sequentially since the value of \(k_i\) only depends on the values of \(k_1, \ldots, k_{i-1}\). The stage values for a 4-stage implicit Runge-Kutta method are

Here is it not straightforward to compute the stage values since the value of \(k_i\) depends upon the values of all stage values \(k_1, \ldots, k_s\). When using an implicit method we need to compute the values of \(k_i\) by solving a system of non-linear equations.

Consider the solution of the IVP of the form

If there are \(N\) equations in the system then

Recall that the general form of a Runge-Kutta method for solving a system of ODEs is

To simplify the calculations to for implicit methods we introduce a vector \(\mathbf{z}_i\), such that

and the expression for the stage values \(\mathbf{k}_i\) becomes

Substituting this into equation (3.3) we have

and also into equation (3.2) gives

Using an \(s\)-stage IRK method, the values of \(\mathbf{z}_i\) given by equation (3.4) are

Writing this as a matrix equation

Since \(\mathbf{z}_i\) and \(f(t_n + c_ih, \mathbf{y}_n + \mathbf{z}_i)\) are \(N \times 1\) vectors then these matrices are \(Ns \times 1\). We can replace the summation with matrix multiplications using a Kronecker product.

Definition 3.4 (The Kronecker product)

The Kronecker product between an \(m \times n\) matrix \(A\) and another \(p \times q\) matix \(B\) is denoted by \(A \otimes B\) and defined by

For example, if we have a 2-stage method so that \(s = 2\) and a system of \(N = 3\) ODEs

Introducing a notation where \(z_{i,j}\) and \(f_{i,j}\) are the \(j\)-th elements of \(\mathbf{z}_i\) and \(f(t_n + c_ih, \mathbf{y}_n + \mathbf{z}_i)\) respectively, then this can be written as

The \(6\times 6\) matrix is \(A \otimes I_N\) so we can write the expression for the stage values \(\mathbf{z}_i\) as

where

We can also use a Kronecker product to simplify the expression for \(\mathbf{y}_{n+1}\) from equation (3.5)

3.3.1. Newton’s Method#

The calculation of the stage values \(\mathbf{z}_i\) using equation (3.8) requires the solution of a system of implicit equations. This is done using Newton’s method which is a well known numerical method to computing the solutions of \(g(x) = 0\) where \(g\) is a differentiable function. Newton’s method is written as

where \(x^{(k)}\) and \(x^{(k+1)}\) are current and improved estimates of the solution \(x\). The implementation of Newton’s method begins with a guess value of \(x^{(0)}\), and equation (3.10) is iterated until two successive estimates agree to some predefined tolerance.

Given a system of equations \(g(\mathbf{x}) = \mathbf{0}\) where \(g = (g_1, g_2, \ldots)\) are differentiable functions, then the matrix form of Newton’s method is

where \(J\) is the Jacobian matrix

A problem we have here is that the calculation of the inverse \(J^{-1}\) is an expensive calculation. So to avoid calculating a matrix inverse we use a two-stage process, let \(\Delta \mathbf{x} = \mathbf{x}^{(k+1)} - \mathbf{x}^{(k)}\) then equation (3.11) can be written as

which is a linear system that can be solved to give \(\Delta \mathbf{x}\). The improved estimate is then calculated using

We cease iterating when the values of \(\Delta x\) are below a given tolerance

Computing the values of \(\mathbf{z}_i\) using Newton’s method#

We apply Newton’s method for solving equation (3.8). Writing it in the form \(g(\mathbf{z}) = \mathbf{0}\) we have

and the Jacobian matrix is

Note that each of the derivatives \(\frac{\partial}{\partial \mathbf{z}}f(t_n + c_ih, \mathbf{y}_n + \mathbf{z}_i)\) are \(N \times N\) Jacobian matrices so \(J\) is a \(Ns \times Ns\) matrix. To save computational effort we use the approximation

so we only need to calculate a single Jacobian matrix for each step. Using an approximation of the Jacobians in this way means that we may need more iterates, but this his more efficient than having fewer iterates and calculating \(s^2\) Jacobians at each step. Another consideration we have is how to determine the derivatives in the Jacobian. We can approximate the derivatives using a first-order forward-difference approximation

where \(\mathbf{\epsilon}\) is some small value. The Jacobian matrix \(J\) is constant for all iterations of Newton’s method in the same step, so the solution of the linear system in equation (3.12) is well suited to LU decomposition.

3.3.2. Applying an IRK to solve a system of ODEs#

The steps used to solve an IVP using an IRK method are outlined in Algorithm 3.1.

Algorithm 3.1 (Solving an IVP using an IRK method)

Inputs A system of first-order ODEs of the form \(\mathbf{y}' = f(t, \mathbf{y})\), a domain \(t \in [t_0, t_{\max}]\), a vector of initial values \(\mathbf{y}(t_0) = y_0\) and a value of the step length \(h\)

Outputs \((t_0, t_1, \ldots)\) and \((\mathbf{y}_0, \mathbf{y}_1, \ldots)\).

\(nsteps \gets \left\lfloor \dfrac{t_{\max} - t_0}{h} \right\rfloor\)

For \(n = 0, \ldots, nsteps\)

\(J \gets \dfrac{\partial}{\partial \mathbf{y}} f(t_n, \mathbf{y}_n)\)

\(\mathbf{z} \gets \mathbf{0}\)

For \(k = 1, \ldots, maxiter\)

\(g(\mathbf{z}) \gets \mathbf{z} - h (A \otimes I_N) F(\mathbf{z})\)

Solve the linear system \((I - h (A \otimes J)) \Delta \mathbf{z} = -g(\mathbf{z})\) for \(\Delta \mathbf{z}\)

\(\mathbf{z} \gets \mathbf{z} + \Delta \mathbf{z}\)

If \(\| \Delta \mathbf{z} \| < tol\)

break

\(\mathbf{y}_{n+1} \gets \mathbf{y}_n + h (\mathbf{b}^T \otimes I_N) F(\mathbf{z})\)

\(t_{n+1} \gets t_n + h\)

Return \((t_0, t_1, \ldots)\) and \((\mathbf{y}_0, \mathbf{y}_1, \ldots)\)

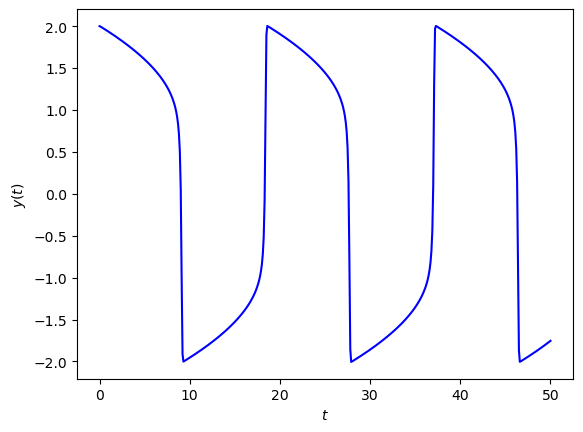

Example 3.4

The van der Pol oscillator is a well known model of an oscillating system with non-linear damping described by the second-order ODE

where \(\mu\) is a scalar parameter that governs the strength of the damping.

Use the Radau IA method to compute the solution to the van der Pol oscillator over the first 50 seconds where \(y(0) = 1\) and \(\mu = 10\).

Solution

We need to rewrite the second-order ODE as a system of first-order ODEs. Let \(y_1 = y\) and \(y_2 = \dot{y}_1\) then

so

The initial conditions are \(\mathbf{y}_0 = (2, 0)\) and \(\mu = 10\) and the Jacobian matrix for the first step is

The coefficients for the Radau IA method are

Using Newton’s method to solve for \(\mathbf{z}\) using zero starting values then

and \(g(\mathbf{z})\) is

Solving \((I_2 - h(A \otimes J))\Delta \mathbf{z} = -g(\mathbf{z})\) for \(\Delta \mathbf{z}\) gives

so the new estimate of \(\mathbf{z}\) is

Here \(\|\Delta \mathbf{z}\| = 0.0564 > 10^{-6}\) so we need to continue iterating. After three iterations \(\mathbf{z}\) converges to the solution

Calculating \(\mathbf{y}_{1}\)

The solution over the full domain \(t \in [0, 50]\) is shown in Fig. 3.1.

Fig. 3.1 Solution of the van der Pol oscillator where \(\mu=10\) using the Radau IA IRK method.#

3.3.3. Code#

The code below defines the functions jac() and radauIA() which computes the Jacobian of a function \(f(t, y)\) and the solution to an initial value problem using the Radau IA method respectively.

def jac(f, t, y):

J = np.zeros((len(y), len(y)))

epsilon = 1e-6

for i in range(len(y)):

y_plus_epsilon = y.astype(float)

y_plus_epsilon[i] += epsilon

J[:,i] = (f(t, y_plus_epsilon) - f(t,y)) / epsilon

return J

def radauIA(f, tpsan, y0, h):

N = len(y0)

nsteps = int((tspan[1] - tspan[0]) / h)

t = np.zeros(nsteps + 1)

y = np.zeros((nsteps + 1, N))

t[0] = tspan[0]

y[0,:] = y0

A = np.array([[1/4, -1/4],

[1/4, 5/12]])

b = np.array([1/4, 3/4])

c = np.array([0, 2/3])

s = 2

for n in range(nsteps):

z = np.zeros(N * s)

F = np.zeros(N * s)

J = jac(f, t[n], y[n,:])

for k in range(10):

F[:N] = f(t[n] + c[0] * h, y[n,:] + z[:N])

F[N:] = f(t[n] + c[1] * h, y[n,:] + z[N:])

g = z - h * np.dot(np.kron(A, np.eye(N)), F)

delta_z = np.linalg.solve(np.eye(N * s) - h * np.kron(A, J), -g)

z += delta_z

if np.linalg.norm(delta_z) < 1e-6:

break

y[n+1,:] = y[n,:] + h * np.dot(np.kron(b.T, np.eye(N)), F)

t[n+1] = t[n] + h

return t, y

This code uses the following NumPy functions:

np.dot(A, B)– returns the matrix multiplication \(AB\)np.kron(A, B)– returns the Kronecker product \(A \otimes B\)np.linalg.solve(A, b)– returns the solution to the linear system \(A \mathbf{x} = \mathbf{b}\)np.eye(n)– returns the \(n \times n\) identity matrixnp.linalg.norm(a)– returns the Euclidean norm of the vector \(\mathbf{a}\)

function J = jac(f, t, y)

N = length(y);

J = zeros(N, N);

epsilon = 1e-2;

for i = 1 : N

y_plus_epsilon = y;

y_plus_epsilon(i) = y_plus_epsilon(i) + epsilon;

J(:, i) = (f(t, y_plus_epsilon) - f(t, y)) / epsilon;

end

end

function [t, y] = radauIA(f, tspan, y0, h)

N = length(y0);

nsteps = floor((tspan(2) - tspan(1)) / h);

t = zeros(nsteps + 1, 1);

y = zeros(nsteps + 1, N);

t(1) = tspan(1);

y(1,:) = y0;

A = [ 1/4, -1/4 ; 1/4, 5/12 ];

b = [ 1/4 ; 3/4 ];

c = [ 0 ; 2/3 ];

s = 2;

for n = 1 : nsteps

z = zeros(N * s, 1);

F = zeros(N * s, 1);

J = jac(f, t(n), y(n,:));

for k = 1 : 10

F = [f(t(n) * c(1) * h, y(n,:) + z(1:N)') ;

f(t(n) * c(2) * h, y(n,:) + z(N+1:end)')];

g = z - h * kron(A, eye(N)) * F;

delta_z = (eye(N * s) - h * kron(A, J)) \ -g;

z = z + delta_z;

if norm(delta_z) < 1e-6

break

end

end

y(n+1,:) = y(n,:) + (h * kron(b', eye(N)) * F)';

t(n+1) = t(n) + h;

end

end

This code uses the following functions:

kron(A, B)– returns the Kronecker product \(A \otimes B\)A \ b– returns the solution to the linear system \(A \mathbf{x} = \mathbf{b}\)eye(n)– returns the \(n \times n\) identity matrixnorm(a)– returns the Euclidean norm of the vector \(\mathbf{a}\)

Note

The solution of the linear system for Newton’s method in the code above was done using NumPy’s and MATLAB’s linear system solvers. In practice, this is done using LU decomposition which is more efficient in this case since the coefficient method is constant for all iterations in a step. I used NumPy’s and MATLAB’s solvers here since have yet to meet LU decomposition which is covered in chapter 6.